The AI Revolution in Drug Discovery: A Promise Caught in the Crossfire of a Data Blockage

The pharmaceutical sector is at a crossroads. Innovation has been stumbling for decades under a model that is becoming economically unsustainable. We are caught in an unforgiving equation: the probability of success remains terrifyingly low, timelines keep getting longer, and the cost of bringing a new drug to market is skyrocketing. Yet on the horizon, a powerful new force promises to rewrite this equation entirely: artificial intelligence. AI is more than just a tool; it is a paradigm shift, a computational revolution on the verge of transforming every phase of drug discovery and development.

However, there is a catch. Despite its potential, this revolution is running on inferior fuel. The world’s most advanced algorithms are being held back by a fundamental data bottleneck, a crucial blind spot that restricts their power and perpetuates risk. This report argues that the missing ingredient, the high-octane fuel required to power the next generation of pharmaceutical innovation, has been hiding in plain sight: the vast, complex, and criminally underutilized world of patent data. By understanding and harnessing this data, we can move beyond incremental improvements and finally unlock the full, transformative potential of AI.

Spiraling Costs, Plummeting Success, and the AI Imperative

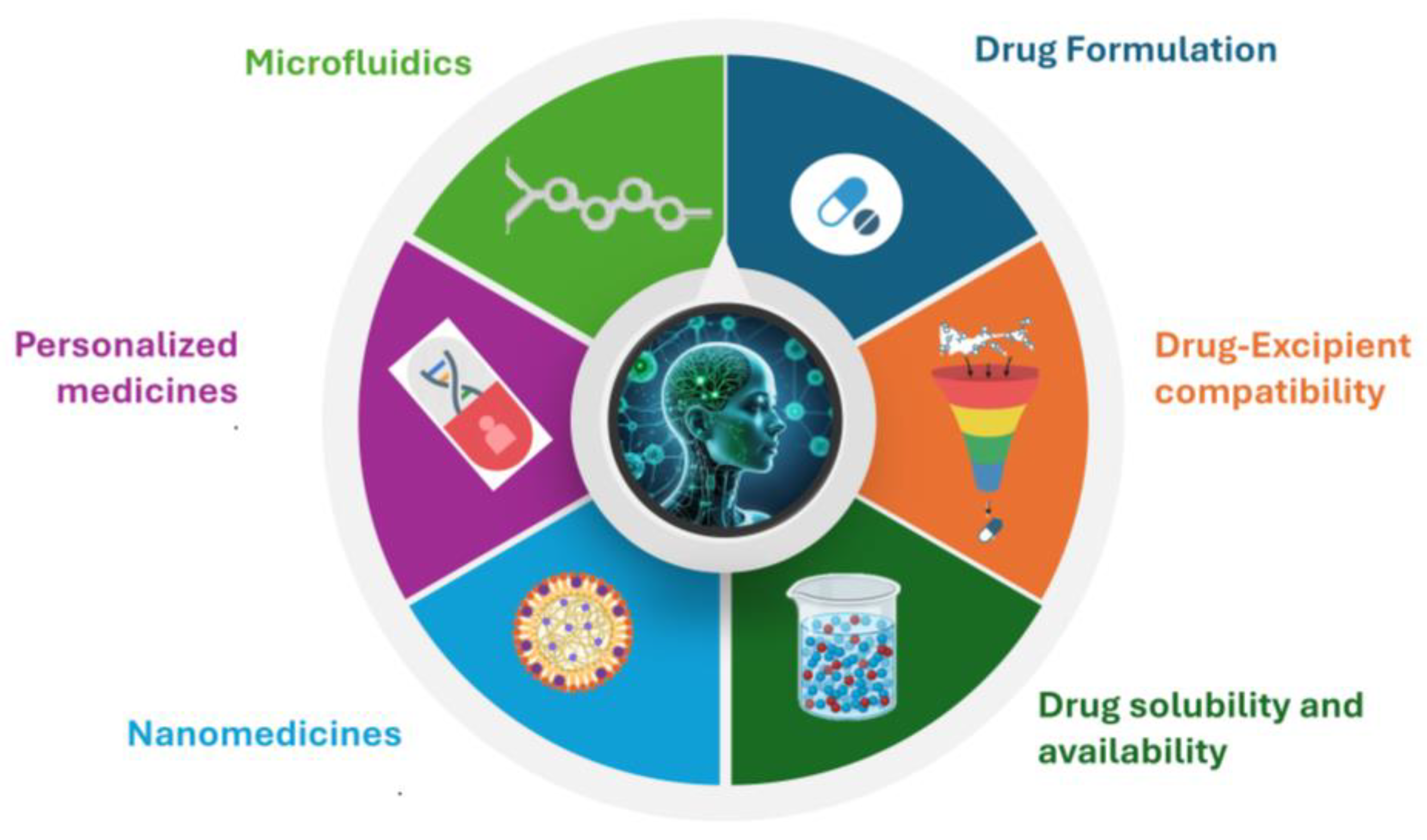

The Unsolvable Equation

Let’s be brutally honest about the state of our industry. The traditional path of drug discovery is a long, arduous, and punishingly expensive journey. Once you factor in the staggering number of failures along the way, developing a single new medicine typically takes between 10 and 15 years and costs more than $2.5 billion. This is not a model that can be sustained. Target identification, hit discovery, lead optimization, and extensive preclinical and clinical testing are all part of the process, and at every stage failure is the norm rather than the exception.

The statistics paint a bleak picture. Only about 10% of drug candidates that enter Phase I clinical trials will ever receive regulatory approval from the FDA. For every success story that reaches patients, nine other candidates have consumed vast resources only to be abandoned because of safety concerns or a lack of efficacy. The industry is grappling with a profound productivity crisis. According to a recent analysis [5], declining R&D productivity has pushed the internal rate of return on these massive investments below the cost of capital, a clear signal that the old way of doing things is fundamentally broken. In 2024, the success rate for drugs entering Phase I trials fell to just 6.7%, a significant drop from 10% a decade earlier.

This is precisely why the development of artificial intelligence has been met with such fervent optimism. AI and its subset, machine learning (ML), offer a powerful antidote to the inefficiencies of the traditional model. The potential economic impact is staggering, with some projections indicating that AI could generate between $350 billion and $410 billion in annual value for the pharmaceutical sector by 2025. AI promises to accelerate timelines, reduce costs, and, most importantly, increase the probability of success [1]. This is happening right now, not in the distant future.
AI is being deployed across the entire drug development pipeline. In early-stage discovery, algorithms are sifting through genomic and proteomic data to identify novel disease targets with unprecedented speed. For lead discovery and optimization, deep learning models like Graph Neural Networks (GNNs) and Transformers are predicting molecular properties, binding affinities, and toxicity profiles, allowing chemists to design better molecules faster. Major pharmaceutical players are all-in. To stay competitive, companies like Johnson & Johnson, AstraZeneca, and Pfizer are incorporating AI into their core R&D processes, forming strategic partnerships with AI-first biotechs, and building internal capabilities. The imperative is clear: embracing AI is no longer a choice but a matter of survival in the modern R&D landscape.
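
To make the attrition figures quoted earlier concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes, purely for illustration, that every Phase I entrant succeeds independently with the same probability, so the expected number of entrants per approval is the reciprocal of the success rate. The function name `candidates_per_approval` is hypothetical and not drawn from any cited analysis.

```python
def candidates_per_approval(success_rate: float) -> float:
    """Expected number of Phase I entrants needed to obtain one approval.

    Assumption (illustrative only): each candidate succeeds independently
    with the same probability, so the expectation is 1 / success_rate.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in the interval (0, 1]")
    return 1.0 / success_rate


# Success rates quoted in the text: 10% a decade earlier, 6.7% in 2024.
rates = {"a decade earlier": 0.10, "2024": 0.067}

for label, rate in rates.items():
    entrants = candidates_per_approval(rate)
    failures = entrants - 1  # candidates abandoned for each approved drug
    print(f"{label}: {rate:.1%} success rate, "
          f"about {entrants:.1f} entrants and {failures:.1f} failures per approval")
```

Under this simplified assumption, the drop from 10% to 6.7% means roughly 15 candidates, rather than 10, must enter Phase I for each drug that is eventually approved.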

The “Garbage In, Garbage Out” Conundrum: When Sophisticated Models Meet Flawed Data

Despite the hype and the genuine progress, the field of AI-driven drug discovery is beset by a persistent and inconvenient truth: the performance of any AI model is fundamentally limited by the quality of the data it is trained on. We are building ever more powerful computational engines, from complex GNNs that understand molecular structures to generative models that can dream up completely new chemical entities, but we are fueling them with incomplete, biased, and frequently commercially irrelevant data. This is the age-old principle of “garbage in, garbage out,” and it represents the single greatest bottleneck preventing AI from realizing its full, revolutionary potential.

The challenges are systemic and multifaceted. Effective AI in drug discovery depends on access to large, structured, trustworthy, high-quality datasets. The pharmaceutical data landscape, however, is one of fragmentation, inconsistency, and inaccessibility. Key data is frequently isolated within competing organizations and protected as proprietary assets, which makes it difficult to assemble the comprehensive datasets required to train truly powerful models. Even when public data is available, it is frequently plagued by poor quality, a lack of standardization, inconsistent metadata, and significant gaps or errors.

This is more than a minor inconvenience; it has significant consequences. Poor data quality can produce false positives that waste millions of dollars in subsequent wet-lab experiments or, even worse, false negatives that cause a promising drug candidate to be overlooked entirely. One analysis aptly calls this a “vicious cycle,” in which models trained on compromised data lose accuracy over time, resulting in a significant waste of resources and a steady erosion of trust in the technology [14].

The “black-box” nature of many advanced AI models adds to the problem.
When a deep learning model makes a prediction, such as suggesting a novel compound or identifying a new target, it can be extremely challenging to understand why it arrived at that conclusion. This lack of transparency and interpretability is a major barrier to adoption. If a medicinal chemist cannot examine the reasoning behind a molecule designed by an algorithm, how can they trust it? How can a company justify an investment of one hundred million dollars on the basis of an opaque prediction? And how can regulatory bodies like the FDA approve a drug when the rationale behind its discovery is hidden within a complex, unexplainable algorithm?

This points to a significant strategic misalignment in the industry. AI algorithms have matured rapidly and impressively, but our data strategy has not kept pace. We spend heavily on building stronger engines while paying little attention to the quality of the fuel. The result is a revolution that is always on the verge of breaking through but is held back by its own foundation. The most significant competitive advantage over the next ten years will not come from having a slightly more advanced algorithm; it will come from having a proprietary data source that is fundamentally superior, more extensive, and impossible for anyone else to duplicate. The missing ingredient is a new data paradigm, not a novel model architecture.

Conventional Fuel: Why Public and Clinical Data Aren’t Enough

Before developing this new data paradigm, we must first critically evaluate the limitations of our current fuel sources. Today, the vast majority of AI models used in drug discovery are trained on a combination of two primary types of data: clinical trial results and publicly available bioactivity data from the academic literature. Both are undeniably valuable and form the bedrock of modern bioinformatics, but they are, by their very nature, insufficient to power the kind of commercially focused, breakthrough innovation the industry desperately needs. Relying on them exclusively is like trying to navigate a vast, unexplored ocean with a map that shows only the well-known coastlines and a few major ports. The most significant features, the hidden reefs, deep-water currents, and undiscovered islands, are simply not charted.

The Academic Archive: The Promise and Pitfalls of Public Databases

Open science is built on public databases like PubChem and ChEMBL, which have helped us all gain a better understanding of chemical biology. Before delving into their drawbacks, it is essential to acknowledge their fundamental role, which represents a monumental achievement in data curation and accessibility.

The Importance of PubChem and ChEMBL as a Foundation for Knowledge

These open repositories are invaluable resources because they provide free access to millions of unique chemical structures and the bioactivity data associated with them [17]. This information is meticulously, and often manually, curated from peer-reviewed scientific journals to create a rich knowledge base that links compounds to biological targets [20]. The databases enable the systematic study of structure-activity relationships (SAR), the identification of “tool compounds” for probing biological pathways, and the exploration of potential off-target activities that might explain side effects or suggest new applications for existing compounds.